Hi All,

This post will be an introduction to the Global Parameters available in Azure Data Factory V2.

It’s been a while since my last post, and I hope anyone following is doing well where ever they are.

After months of lockdown, and a heavier than normal workload I finally have some time to myself to post another blog.

No excuses really, it’s been over 2 years since the last one!

From my experience so far, the Global Parameters are a great addition to the functionality available in ADFv2.

As a factory has more and more pipelines added, the maintenance and configuration of parameters becomes cumbersome, especially when you nest your pipelines.

Global Parameters are constant through the factory. Once they are set, you can use them anywhere you use expressions.

Pre-requisites if you want to work through this blog, will be an Azure SQL Server and Database, and a Key Vault to hold credentials.

The Data Factory you use will need GET in the Secret permissions access policy on the Key Vault.

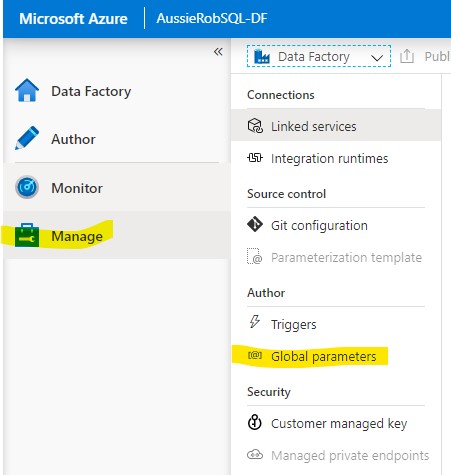

To start, Global Parameters are available in the Manage section.

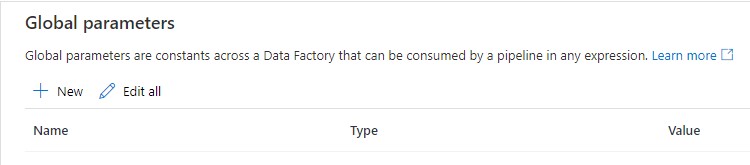

There are 2 options here. + New and Edit all

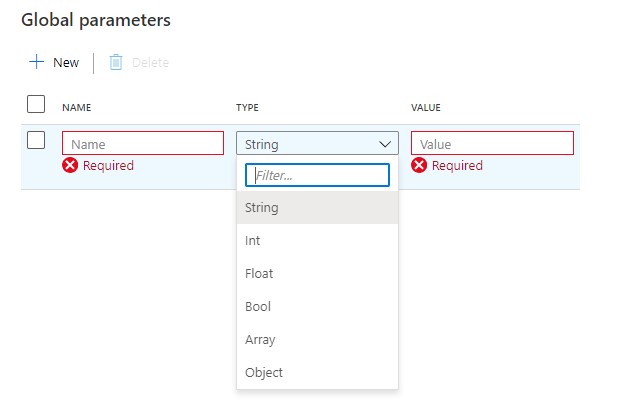

I’ll work in Edit all as I can edit multiple parameters at a time. Clicking on + New you are presented with the following.

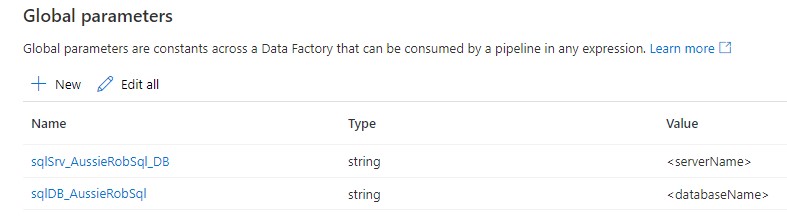

For this example, let’s work with two parameters that I used most in my pipelines. SQL Server Name, and SQL Server Database Name.

Add two parameters

– sqlSrv_<identifier>_<type> where identifier is a way to identify the server name, and type is DB or DWH (yeah I know it’s Synapse Analytics now, but I still use DWH)

– sql<type>_<identifier> where type is DB / DWH and identifier is the same as the one used for the sqlSrv parameter.

Publish the pipeline and these are now ready to use in a pipeline!

If you haven’t yet done so, add a Key Vault linked service to the Data Factory. In my example, I am using AzureKeyVault as the linked service name, and publish.

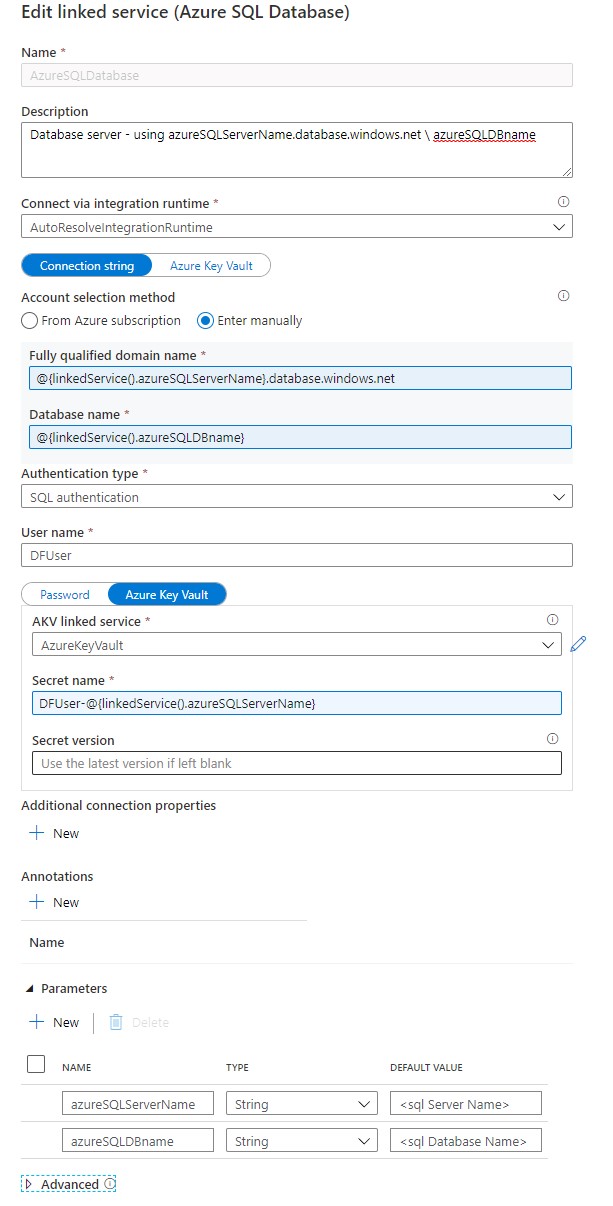

Here is the linked service configuration I use for SQL Server. It uses ‘DFUser’ as the user name for the SQL database, but this can be changed to the value you want.

I also use DFUser-<sql Server Name> as the Secret name in the Key Vault to store the SQL password.

Source for my linked service:

{

"name": "AzureSQLDatabase",

"type": "Microsoft.DataFactory/factories/linkedservices",

"properties": {

"parameters": {

"azureSQLServerName": {

"type": "string",

"defaultValue": "<sql Server Name>"

},

"azureSQLDBname": {

"type": "string",

"defaultValue": "<sql Database Name>"

}

},

"annotations": [],

"type": "AzureSqlDatabase",

"typeProperties": {

"connectionString": "Integrated Security=False;Encrypt=True;Connection Timeout=30;Data Source=@{linkedService().azureSQLServerName}.database.windows.net;Initial Catalog=@{linkedService().azureSQLDBname};User ID=DFUser",

"password": {

"type": "AzureKeyVaultSecret",

"store": {

"referenceName": "AzureKeyVault",

"type": "LinkedServiceReference"

},

"secretName": "DFUser-@{linkedService().azureSQLServerName}"

}

},

"description": "Database server - using azureSQLServerName.database.windows.net \\ azureSQLDBname"

}

}

Now we have Global Parameters, and some Linked services available.

For my example, let’s run a simple SQL select using a Lookup activity.

To start, jump to the Author Section and create a new Dataset. In this example, I’m using an Azure SQL Database dataset. Here is my source (replace identifier as required):

{

"name": "sqlDB_<identifier>__connection",

"properties": {

"linkedServiceName": {

"referenceName": "AzureSQLDatabase",

"type": "LinkedServiceReference",

"parameters": {

"azureSQLServerName": {

"value": "@toUpper(dataset().sqlSrv_<identifier>)",

"type": "Expression"

},

"azureSQLDBname": {

"value": "@dataset().sqlDB_<identifier>",

"type": "Expression"

}

}

},

"parameters": {

"sqlSrv_<identifier>": {

"type": "string"

},

"sqlDB_<identifier>": {

"type": "string"

}

},

"annotations": [],

"type": "AzureSqlTable",

"schema": []

}

}

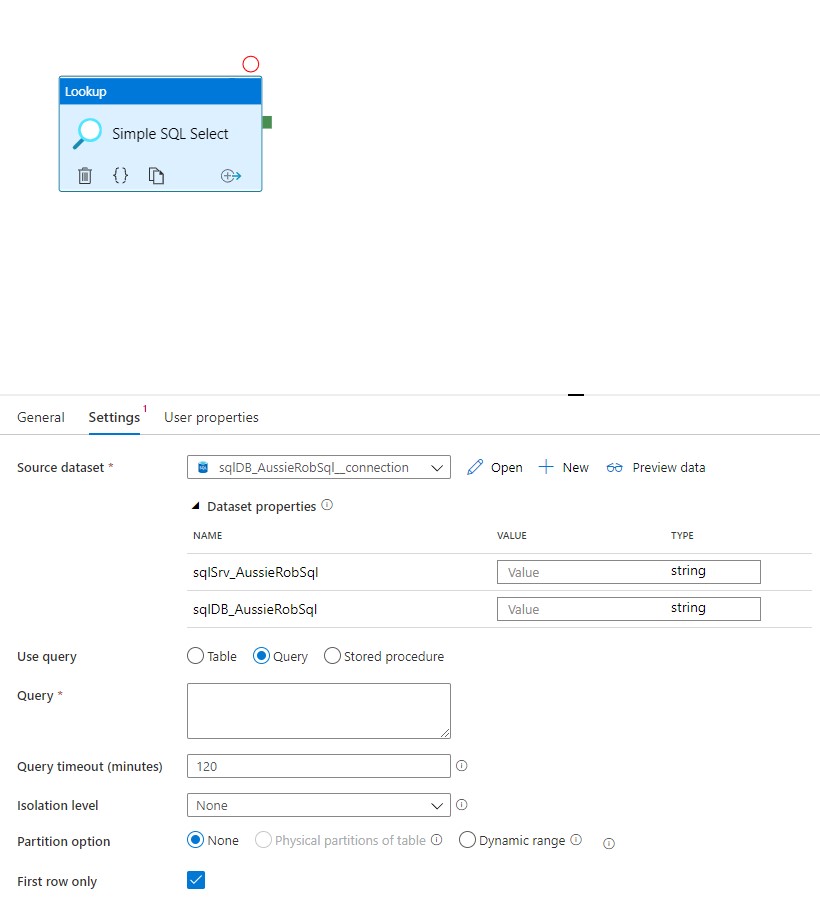

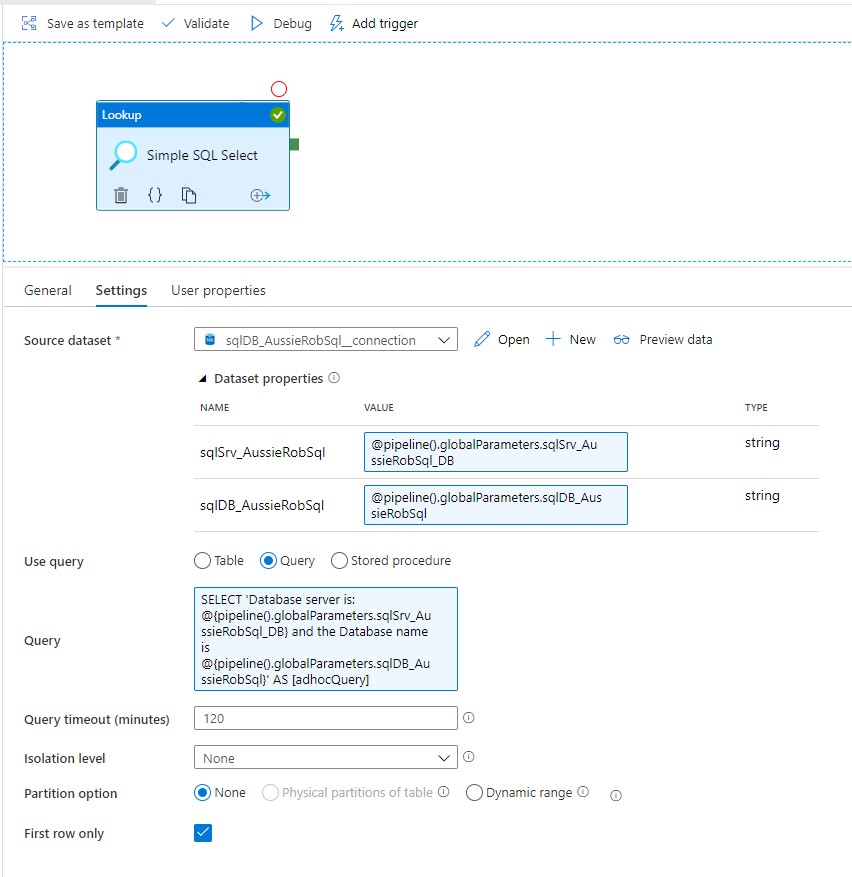

Then, create a new pipeline, and drag a Lookup activity into the canvas, and select the Source Dataset created previously.

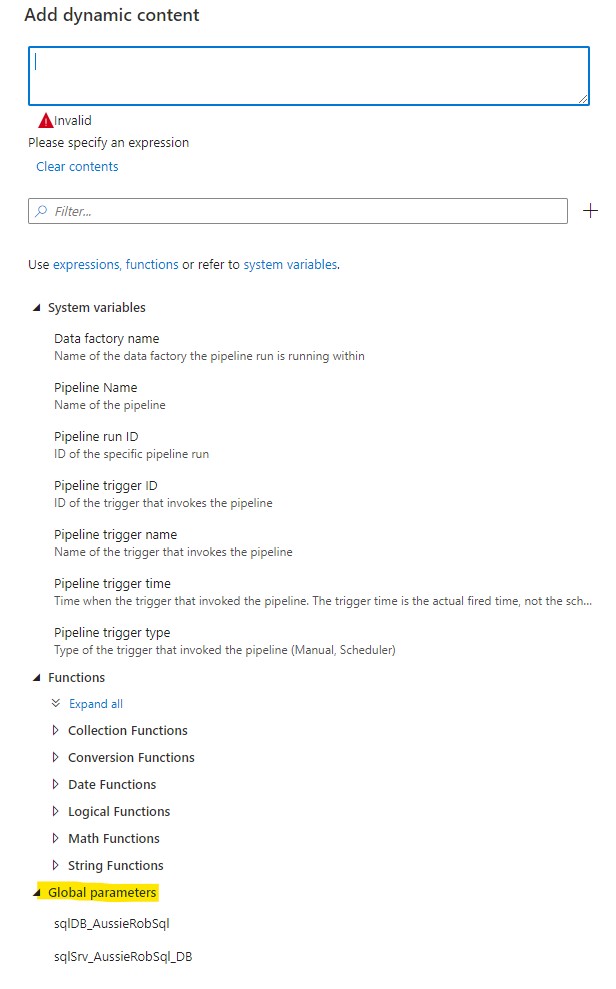

Now click in the sqlSrv_<identifier> value in the Dataset parameter list, and select Add dynamic content

Toward to bottom of the Add dynamic content window, the Global Parameters will be at the bottom.

From here, we can select the parameter, or fill in the expression with (replace identifier as required):

@pipeline().globalParameters.sqlSrv_<identifier>_<type>;

Do the same for the second Dataset parameter, choosing the required Global parameter.

For the query, let’s use an expression to return the values of the Global parameters.

Click on Query and select Add dynamic content and paste the following and click finish when done.

SELECT 'Database server is: @{pipeline().globalParameters.sqlSrv_<identifier_<type>} and the Database name is @{pipeline().globalParameters.sqlDB_<identifier>}' AS [adhocQuery]

Your Lookup activity should look like this.

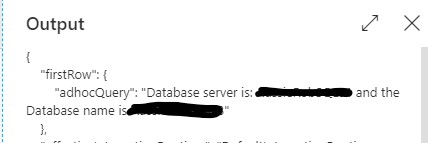

Publish the pipeline, and hit the Debug button to run the pipeline.

When it’s finished, click on the output of the Lookup activity, and we should see

And that is a simple activity using a Global parameter!

One thing to note about Global Parameters, is they are deployed at the Factory level when using ARM templates. If you export the ARM template from the factory and open the archive, you will see a factory folder that contains the template and the parameter file.

When deploying you will need to deploy the ARM template from the factory folder first, and then deploy the normal ARM template for the remainder of the components.

That’s it for today. Stay safe everyone.

-rob